Exploring Patterns

This post is part of a three-part subseries in which we design the high-level architecture for Cryptomate.

- Modules and components.

- Exploring patterns (this post).

- Critical points.

Evaluating architecture patterns

Given the task at hand, we can quickly trim down the options to a few candidates:

- Event-driven architecture.

- Hexagonal architecture.

- Micro-services architecture.

- Micro-kernel architecture.

We will explore, evaluate and eventually select one of them.

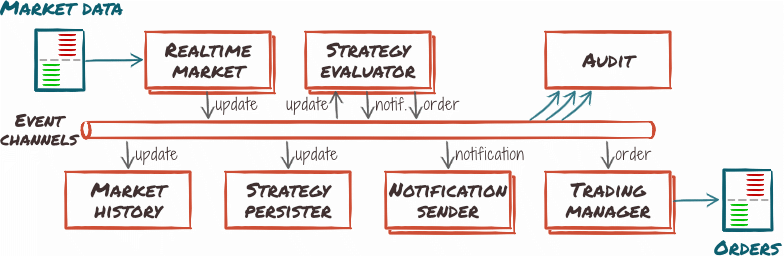

Event-driven architecture

This architecture pattern revolves around reacting to events: the workflow is a chain of events flowing through event processors, optionally supervised by a mediator.

It enables asynchronous, distributed workflows, which could power Cryptomate:

- Initial events would come from market data providers.

- Components would forward messages over event channels as they go through the logic layers.

- Outgoing notifications and orders would be events down the line.
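The flow described in this list can be sketched in a few lines. This is only a minimal, in-process illustration, not Cryptomate's design: the `EventBus` class, the `tick` and `order` topic names, and the price-threshold rule are all hypothetical, and a real deployment would use an actual message broker instead of a local queue.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Callable, Deque, Dict, List


@dataclass(frozen=True)
class Event:
    topic: str     # hypothetical channel name, e.g. "tick" or "order"
    payload: dict


class EventBus:
    """Toy in-process event channel (assumption: stands in for a broker)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[Event], None]]] = defaultdict(list)
        self._queue: Deque[Event] = deque()

    def subscribe(self, topic: str, handler: Callable[[Event], None]) -> None:
        self._handlers[topic].append(handler)

    def publish(self, event: Event) -> None:
        self._queue.append(event)

    def run(self) -> None:
        # Drain the queue; processors may publish follow-up events.
        while self._queue:
            event = self._queue.popleft()
            for handler in self._handlers[event.topic]:
                handler(event)


def build_pipeline(bus: EventBus, threshold: float, sent: list) -> None:
    def strategy(event: Event) -> None:
        # Hypothetical rule: emit a buy order when the price is below threshold.
        if event.payload["price"] < threshold:
            bus.publish(Event("order", {"side": "buy", "symbol": event.payload["symbol"]}))

    def order_sink(event: Event) -> None:
        # Stand-in for the component that talks to a trading platform.
        sent.append(event.payload)

    bus.subscribe("tick", strategy)
    bus.subscribe("order", order_sink)


sent_orders: list = []
bus = EventBus()
build_pipeline(bus, threshold=100.0, sent=sent_orders)
bus.publish(Event("tick", {"symbol": "BTC", "price": 99.0}))   # initial market event
bus.publish(Event("tick", {"symbol": "BTC", "price": 101.0}))
bus.run()
```

Only the first tick crosses the threshold, so a single order event reaches the sink. Note that neither processor knows about the other; they only share topic names.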

The main selling points of this architecture pattern are excellent agility and deployment, as event processors are standalone and self-contained, and good performance through scalability as processors can be instantiated several times over new resources to accommodate demand.

This comes at the cost of difficult development and testing. Failures in event processors are tricky to diagnose and the fully asynchronous nature of the workflow is hard to reason about. As for testing, testing single event processors is easy, but end to end testing is hard.

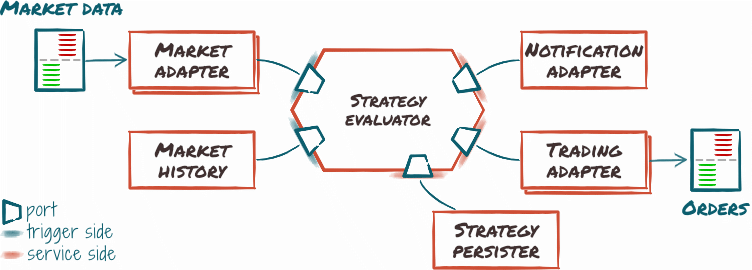

Hexagonal architecture

This architecture pattern, also called the ports & adapters pattern, designs the system by defining a boundary between the inside and the outside of the application. Inside is the business logic, working in an abstract environment. Every domain-level feature or functionality it requires is defined by a port, that is, an abstract slot in the application. On the outside, adapters support specific ports, allowing one to “plug” an external service into the port, thereby providing the feature.

By encapsulating all communications, this pattern facilitates interchangeability of both input and output channels. This could power Cryptomate like this:

- The strategy engine would be the business logic, sitting at the heart of the system.

- Interactions with data sources and event sinks could be modeled as ports and adapters.

- Interactions with trading platforms could be modeled as ports and adapters as well.

The main selling points of this pattern are maintainability and testability. By enforcing that all communications go through ports and adapters, this pattern makes it easy to adapt the system to changing third parties, for instance when supporting new brokers. The same property can be leveraged to test components, by providing them with adapters that feed them test data and trace their reactions.
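To make the port and adapter vocabulary concrete, here is a minimal sketch. Every name in it (`MarketDataPort`, `BrokerPort`, `StrategyEngine`, the two test adapters, and the `buy_below` rule) is an assumption made for illustration, not part of the Cryptomate design.

```python
from typing import Dict, List, Protocol, Tuple


class MarketDataPort(Protocol):
    """Port: what the business logic needs from a market data source."""
    def latest_price(self, symbol: str) -> float: ...


class BrokerPort(Protocol):
    """Port: what the business logic needs from a trading platform."""
    def place_order(self, symbol: str, side: str) -> None: ...


class StrategyEngine:
    """Business logic: it only ever sees ports, never concrete services."""

    def __init__(self, market: MarketDataPort, broker: BrokerPort, buy_below: float) -> None:
        self._market = market
        self._broker = broker
        self._buy_below = buy_below  # hypothetical trading rule

    def evaluate(self, symbol: str) -> None:
        if self._market.latest_price(symbol) < self._buy_below:
            self._broker.place_order(symbol, "buy")


class FixedPriceAdapter:
    """Test adapter: feeds canned prices instead of calling a provider."""

    def __init__(self, prices: Dict[str, float]) -> None:
        self._prices = dict(prices)

    def latest_price(self, symbol: str) -> float:
        return self._prices[symbol]


class RecordingBrokerAdapter:
    """Test adapter: traces orders instead of sending them to a broker."""

    def __init__(self) -> None:
        self.orders: List[Tuple[str, str]] = []

    def place_order(self, symbol: str, side: str) -> None:
        self.orders.append((symbol, side))


broker = RecordingBrokerAdapter()
engine = StrategyEngine(FixedPriceAdapter({"BTC": 95.0, "ETH": 120.0}), broker, buy_below=100.0)
engine.evaluate("BTC")
engine.evaluate("ETH")
```

Supporting a real broker would mean writing one more class with a `place_order` method; `StrategyEngine` would not change.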

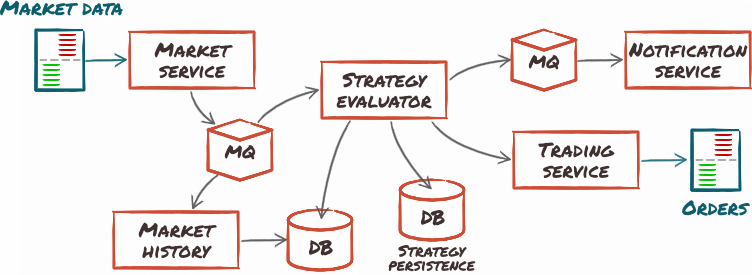

Micro-services architecture

This architecture pattern comes as a simplification of the famous Service-Oriented Architecture or SOA, dropping orchestration and complex routing in favor of a lightweight message broker, or no broker at all.

Micro-services revolve around organizing the application as a set of small, standalone, independently-deployed service components. Each component performs a self-contained business function, which it exposes using a standard interface.

Cryptomate could be designed as a set of micro-services like this:

- Market data collection would be a set of third-party-specific service components.

- Trading platform communications would be handled by a service component.

- Strategy engine would be a service component as well, and would benefit from the architecture in that it would naturally scale, new strategy instances requiring no more than starting additional engine instances.

The main selling points of this pattern are scalability and continuous delivery, as service components can be easily multiplied to handle increasing load, and their independently-deployed, self-contained nature allows incremental deployment. Testability is excellent too, as each service component can be tested in isolation.

On the other hand, being a distributed architecture, it shares the complexity caveats of event-driven architectures. Performance also tends to be poor, because tasks require a lot of communication between distributed nodes. This can be counterbalanced by careful implementation, but does not come naturally with the pattern.

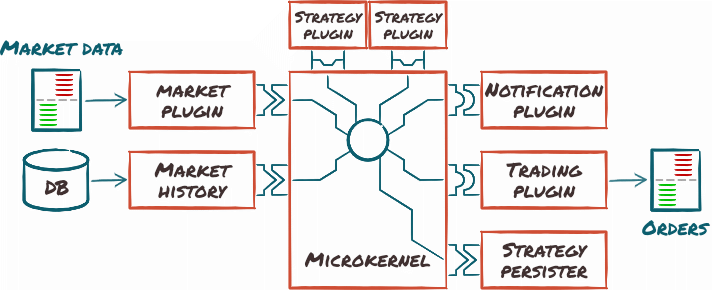

Micro-kernel architecture

This architecture pattern revolves around creating a basic core system, which is then augmented with plugin modules to create a fully functional system. The core system acts as a simple framework, capable of locating plugins and managing them. It typically has only minimal knowledge of the business logic, delegating all details to the plugins.

Cryptomate could be designed as a micro-kernel like this:

- The core would only know about finding market plugins, strategy plugins, notification plugins and trading plugins, and the basic outline of the workflow: markets produce events that go to strategies, which may take actions.

- Market plugins know how to receive data events.

- Strategy plugins fill in the actual trading logic.

- Action plugins include both trading orders and notifications.

The main selling points of this pattern are excellent agility and testability. By adding and removing plugins, the system can easily adapt, and plugins can be tested in isolation.

On the other hand, this pattern does not address scalability at all, as the run-time is monolithic even though its design is not. Also, designing a solid plugin system, capable of handling the diverse contracts that may apply to all the facets of the business logic, is difficult.
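As a rough sketch of the idea, the core below knows only the outline of the workflow and delegates everything else. The `Kernel` class, the plugin signatures, and the `dip_buyer` rule are hypothetical; plugin discovery is replaced by explicit registration to keep the example short.

```python
from typing import Callable, Dict, List, Optional

# Assumed plugin contracts: a strategy maps a market event to an optional
# decision, and an action plugin carries out a decision.
Strategy = Callable[[dict], Optional[dict]]
Action = Callable[[dict], None]


class Kernel:
    """Core system: knows the workflow outline, not the business details."""

    def __init__(self) -> None:
        self._strategies: Dict[str, Strategy] = {}
        self._actions: Dict[str, Action] = {}

    def register_strategy(self, name: str, plugin: Strategy) -> None:
        self._strategies[name] = plugin

    def register_action(self, name: str, plugin: Action) -> None:
        self._actions[name] = plugin

    def on_market_event(self, event: dict) -> None:
        # Markets produce events that go to strategies, which may take actions.
        for strategy in self._strategies.values():
            decision = strategy(event)
            if decision is not None:
                self._actions[decision["action"]](decision)


log: List[str] = []


def dip_buyer(event: dict) -> Optional[dict]:
    # Hypothetical strategy plugin: trade when the price is below 100.
    if event["price"] < 100:
        return {"action": "trade", "symbol": event["symbol"]}
    return None


def trade(decision: dict) -> None:
    # Hypothetical action plugin: records the order instead of sending it.
    log.append(f"trade {decision['symbol']}")


kernel = Kernel()
kernel.register_strategy("dip_buyer", dip_buyer)
kernel.register_action("trade", trade)
kernel.on_market_event({"symbol": "BTC", "price": 95})
kernel.on_market_event({"symbol": "BTC", "price": 105})
```

Even in this toy version, the difficulty mentioned above shows up: the shape of the `decision` dictionary is an implicit contract between strategy and action plugins, and it has to be designed with care.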

Picking and compositing

Summary

Here is what we have so far:

| Attribute | Event-driven | Hexagonal | Micro-services | Micro-kernel |
|---|---|---|---|---|
| Testability | | | | |
| Maintainability | | | | |
| Ease of development | | | | |
| Performance | | | | |
| Availability | | | | |
| Scalability | | | | |

Quality attributes are ordered from the most valued by our requirements to the least valued. I intentionally left out quality attributes that are mostly unaffected by this choice.

We can clearly eliminate the event-driven architecture immediately.[1] Now, the hexagonal model seems like a good fit, but it is lacking on the availability and scalability side. Also, it does not provide a model for abstracting out business logic, which we need to build a versatile strategy engine.

Compositing

We could alleviate those weaknesses though, by:

- Applying some micro-service concepts to specific areas. In fact, the very nature of the hexagonal pattern makes it easy to detach a single module as a micro-service, without touching anything but the matching port and adapter parts. This will allow us to increase scalability and availability of specific modules, with controlled performance impacts (we know the impact is limited to flows going through this specific adapter).

- Using micro-kernel principles on the strategy evaluator module, turning it into an empty workflow that delegates to strategy plugins.

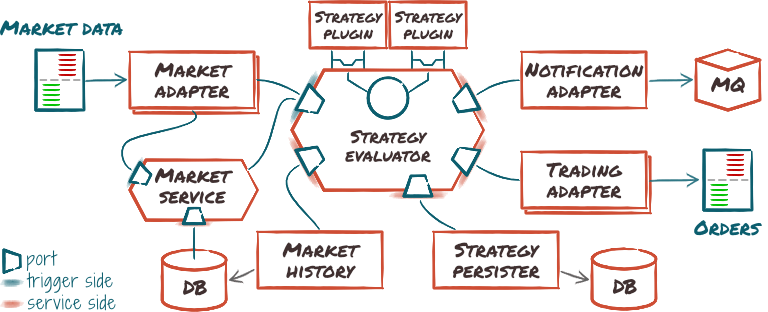

Here is a tentative high-level design leveraging this combination:

This gives us a hybrid architecture: a hexagonal architecture where some adapters use micro-services. Thus, the performance/scalability trade-off can be postponed to integration time, making our system equally suited to servicing large-scale farms of strategies and performance-critical, tailored, single-user setups.
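The following sketch illustrates why that trade-off can be postponed: the same port accepts either an in-process adapter or one that forwards to a detached service. All names (`NotifierPort`, `InProcessNotifier`, `RemoteNotifier`, `run_strategy`) and the JSON message shape are assumptions, and the network transport is reduced to a plain callable so that the example stays self-contained.

```python
import json
from typing import Callable, List, Protocol


class NotifierPort(Protocol):
    """Port: the only thing the business logic knows about notifications."""
    def notify(self, message: str) -> None: ...


class InProcessNotifier:
    """Adapter for single-user setups: handles the notification locally."""

    def __init__(self) -> None:
        self.delivered: List[str] = []

    def notify(self, message: str) -> None:
        self.delivered.append(message)


class RemoteNotifier:
    """Adapter for scaled setups: forwards to a detached service.

    `send` is a hypothetical transport (HTTP client, queue producer, ...).
    """

    def __init__(self, send: Callable[[str], None]) -> None:
        self._send = send

    def notify(self, message: str) -> None:
        self._send(json.dumps({"type": "notification", "message": message}))


def run_strategy(notifier: NotifierPort, price: float) -> None:
    # Hypothetical business rule; identical whichever adapter is plugged in.
    if price < 100.0:
        notifier.notify(f"price dropped to {price}")


local = InProcessNotifier()
run_strategy(local, 99.5)

wire: List[str] = []  # stands in for the network
run_strategy(RemoteNotifier(wire.append), 99.5)
```

Choosing between the two adapters is a wiring decision made when the system is assembled; `run_strategy` is untouched either way.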

Conclusion

As a last step, we should double-check with our requirements that this tentative architecture has a straightforward path for all of them. For instance, adding support for a new market data provider can be done by creating a new adapter, which, in a properly structured hexagonal pattern, will not change existing code.

I did check all of them; feel free to do the same as an exercise, and send me feedback if you find I missed something.

We created our initial idea of the system's architecture and validated that all known scenarios fit into it. There are still a few critical areas that need to be refined.

[1] Note that the event-driven model is a bad fit mostly because I am doing this project alone, which pushes ease of development and testability high up the list; a larger team would probably overcome those difficulties and pick an event-driven model for its performance.